Azure AI Foundry

Foundry is designed to support all AI development related to Azure. This means it's not intended for managing tools like Microsoft 365 Copilot, but when it comes to Azure-based solutions, Foundry provides a comprehensive set of tools.

Lifecycle Support for your AI Solution

AI isn’t a single cloud service, it’s a collection of different technological components used to build intelligent solutions. Alongside foundation models like GPT-5, this can include search indexes, ready-made AIaaS tools, and other supporting resources.

Foundry introduces an AI Project model that brings all related elements of a solution into one cohesive unit. This structure simplifies managing components, access control, and cost tracking. It also allows for resource sharing across projects, for example, a single OpenAI Service instance can serve multiple projects.

From a developer's perspective, Foundry offers useful technical abstractions, such as accessing different language models through a unified interface. This makes switching between models technically easier and more efficient.

Foundry Centralizes AI Infrastructure

Foundry is also designed to support an AI solution throughout its entire lifecycle. The overall system is built around, among other things, the following capabilities:

- Model availability: Foundry offers users thousands of language and other models from both Microsoft and Hugging Face. Developers can choose which ones to deploy. Models like GPT‑5, DeepSeek, Mistral and thousands of others are equally accessible.

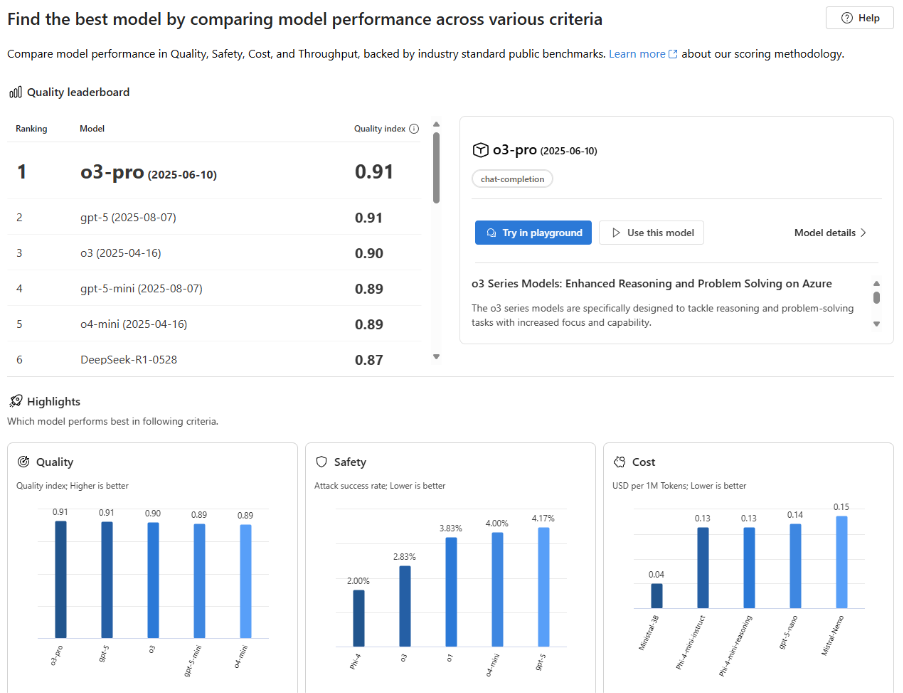

- Model comparison: Within Foundry you’ll find extensive benchmarking data that lets you investigate how different models perform in various scenarios. This helps developers pick the most appropriate model for each case. The newest, most advanced model might give the best results but could also be unnecessarily expensive and slow. With model comparison you can find the simplest model that delivers adequate quality.

- Model testing: Foundry supports running models in a test bench, for example by feeding specific inputs through different models and examining how well or appropriately they respond. The same testing tools can be used to examine content‑security boundaries, for example by testing how well a model adheres to given behavioural rules. Testing also matters in the ongoing lifecycle of the project: for instance when upgrading model versions it’s wise to perform careful testing so that a seemingly benign version upgrade doesn’t compromise result quality.

- Model deployment: Large general‑purpose models like GPT‑5 are always run in the cloud as shared resources. Smaller language models, for example Microsoft’s Phi, can also be run in your own copy. Foundry offers the option to deploy chosen models in dedicated environments. The basic idea is: whatever model you need, you can get it from Foundry at the click of a button whether as a shared service or even as a dedicated virtual machine.

- Model fine‑tuning: With Foundry you can also create fine‑tuned versions of models. Sometimes it is difficult to get a language model to behave exactly as you wish because its base training “pushes” it strongly. Fine‑tuning allows you to feed the model examples of how you want it to behave. This isn’t necessary in most projects, but when the need for control is significant and success is critical, fine‑tuning helps. In Azure AI Foundry you can perform fine‑tuning directly via the interface and of course also deploy the fine‑tuned models for your applications.

- AI governance support: Foundry includes features specifically designed to manage model usage. For example, you can test model behaviour against defined rules, and export the results to external AI‑governance systems.

Microsoft Learn - Security tools: AI Foundry also supports, for instance, content moderation (via Azure Content Safety) and attack‑defence measures. With techniques like “Prompt Shield”, Foundry can identify when someone attempts to override base instructions of the model. These safeguards can be flexibly configured for your application.

Developing AI logic for Applications

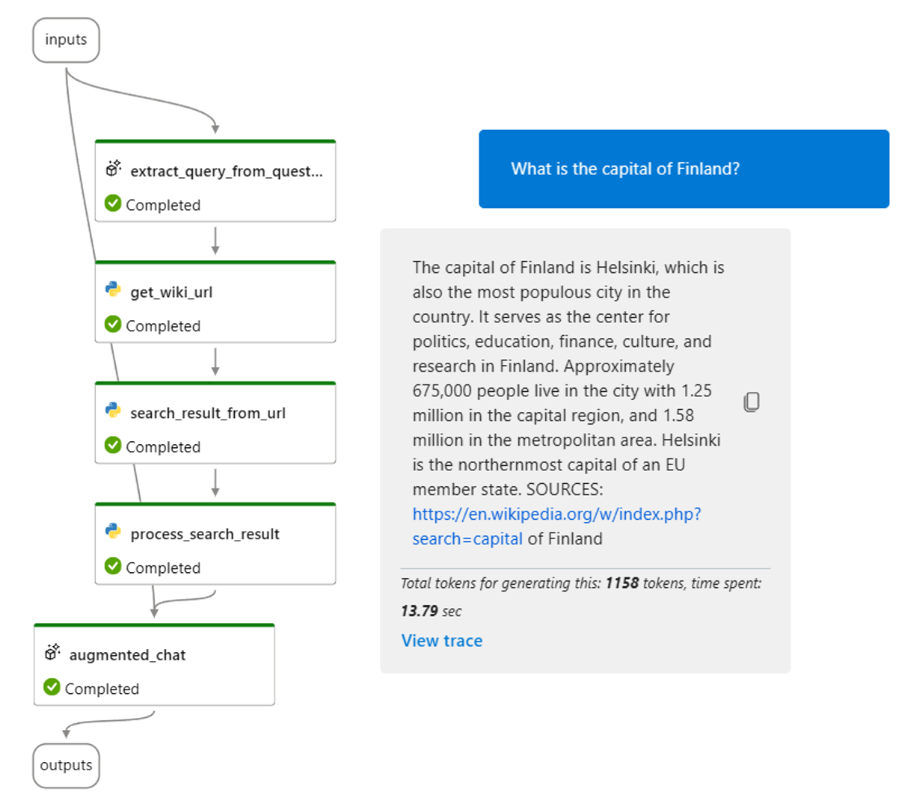

With Prompt Flow, you can design and test AI-based logic without any application development skills. The resulting flow can be exposed through a REST API for your actual applications to call - meaning the AI components can be maintained independently of the application itself.

Azure AI Agent Service, part of Foundry, allows you to build conversational agents and connect them to various knowledge sources using versatile tools. These agent implementations can be extended with custom applications, enabling integration with any tools or systems within your organization.

Foundry also provides rich features for monitoring and supervising agent behavior strengthening its overall lifecycle support.

The platform includes access to Microsoft’s prebuilt AI services, from computer vision and speech generation to form recognition. These services can be integrated into your own solutions, and in many cases, tested directly through Foundry’s user interface portal.

Built with a Developer-First Mindset

Microsoft Foundry is designed to be approachable, versatile, and thoughtfully built for developers. At its core is a recognition by Microsoft of a common challenge: experimentation with AI often leads to fragmented tech silos that are hard for IT to govern. This lack of control slows down the broader adoption of AI across the organization.

In an ideal setup, Foundry resolves these issues. It centralizes AI resources, streamlines access and cost management, and simplifies application development with tools that support the full lifecycle of AI solutions.

Technically, Foundry is fast to deploy but we strongly recommend involving business stakeholders early on. The rollout is a natural opportunity to revisit your organization's AI policies and oversight practices. Foundry can serve as a foundation for lifecycle planning across all your AI applications.

When planning your Foundry setup, focus on existing competencies and a clear AI roadmap. We're happy to support you with both deployment and ongoing use..